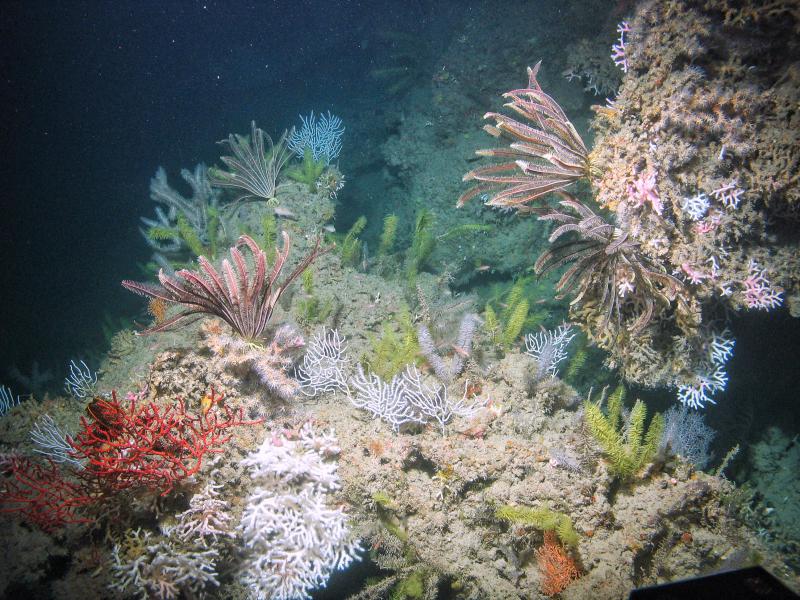

Vital seafloor habitats in the mesophotic and deep benthic regions of the Gulf of Mexico were injured by the 2010 Deepwater Horizon oil spill. NOAA, the Department of the Interior, and partners are working across four interconnected projects to restore them. To map and evaluate these seafloor communities, which lie more than 50 meters below the surface, restoration experts use several technologies, including underwater surveys by remotely operated vehicles (ROVs) equipped with video cameras. These surveys record hundreds of hours of video each field season. To better understand and restore these habitats, scientists comb through every frame of video and annotate the target coral species found in each one, which takes a lot of time and effort!

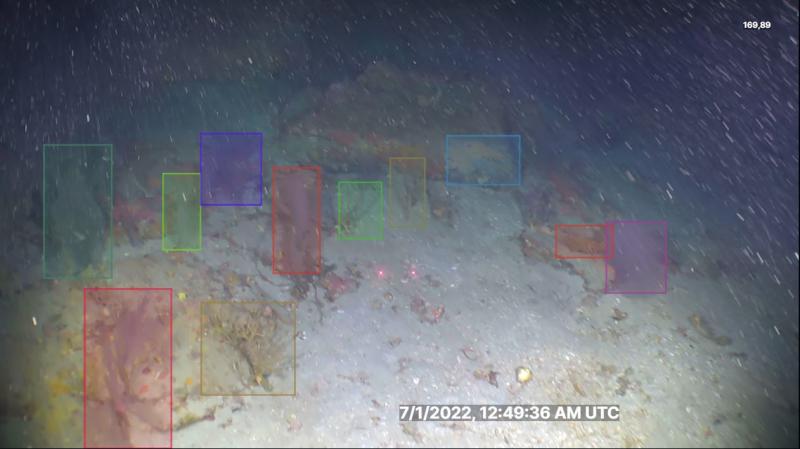

Now, our scientists are exploring Artificial Intelligence (AI) as a way to automatically locate and label specific species of coral in the many hours of ROV video compiled by these projects. Hosted by Zooniverse, the Click-a-Coral project asks volunteers to identify and label seven species of coral in underwater photos collected in the mesophotic and deep benthic regions of the Gulf of Mexico. Volunteers draw bounding boxes, rectangles that outline each coral in a photo, and label the species inside. These labeled boxes are a crucial step in training AI to identify coral species in future videos, and we're asking for volunteers to help achieve this goal.

Volunteer contributions to the Click-a-Coral project will help NOAA scientists and partners build a training dataset that teaches AI to recognize the coral in these images, significantly speeding up the labeling and identification of coral in future videos. Instead of manually reviewing hundreds of hours of footage, scientists will be able to quickly review the AI-generated annotations, correct any errors, and devote more of their time to critical tasks like restoration, scientific studies, and data analysis.

Automating the labeling and identification process with bounding boxes and AI will make the restoration efforts of these projects more efficient and data-driven. The data generated by this project can also inform future natural resource management and protection actions.