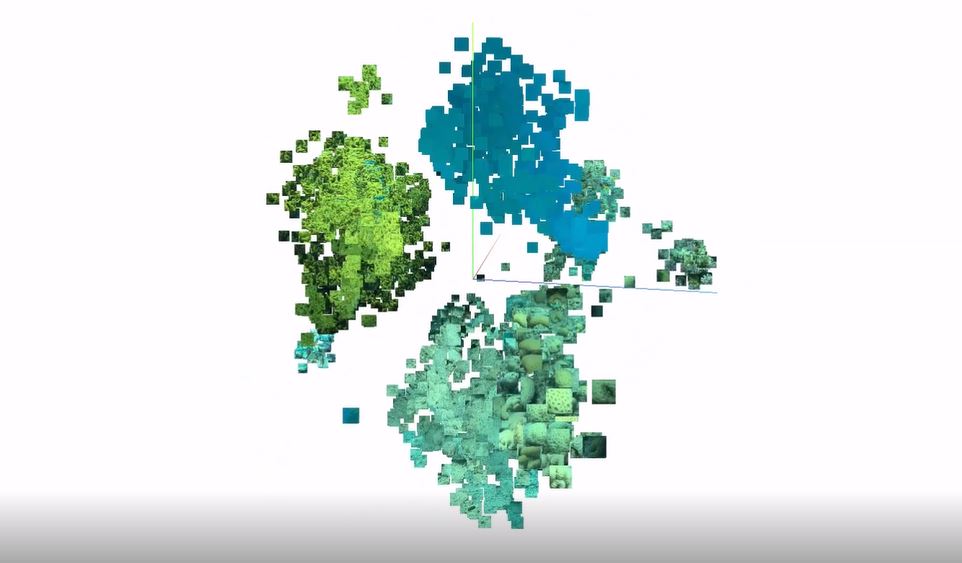

An oldie but a goodie: here we’re showing images from coral reef quadrat surveys plotted in (reduced) feature space.

A convolutional neural network is first trained on the images, and its encoder is then used as a feature extractor. Each image’s “feature” is a 1×N vector, often thousands of dimensions long. We can’t visualize these features and their corresponding images in the original high-dimensional space, but dimensionality-reduction algorithms such as PCA and t-SNE can project them into a 3-D Cartesian coordinate system for visualization.
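To make the reduction step concrete, here is a minimal PCA sketch in NumPy. It assumes the encoder outputs have already been stacked into an (n_images, N) matrix; the random “features” below are a hypothetical stand-in for real CNN embeddings, not data from these surveys.

```python
import numpy as np


def pca_reduce(features: np.ndarray, n_components: int = 3) -> np.ndarray:
    """Project an (n_samples, n_features) matrix onto its top principal components."""
    # Center each feature dimension so the components describe variance around the mean.
    centered = features - features.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


# Hypothetical stand-in for CNN embeddings: 4 classes, 50 images each, 1024-D features.
rng = np.random.default_rng(0)
n_classes, per_class, dim = 4, 50, 1024
centers = rng.normal(scale=5.0, size=(n_classes, dim))
labels = np.repeat(np.arange(n_classes), per_class)
features = centers[labels] + rng.normal(size=(n_classes * per_class, dim))

coords = pca_reduce(features, n_components=3)
print(coords.shape)  # (200, 3)
```

Each row of `coords` is an (x, y, z) point that can be scattered in 3-D and decorated with its image thumbnail. For t-SNE, a common pattern is to run PCA first (to a few dozen dimensions) and pass the result to scikit-learn’s `sklearn.manifold.TSNE(n_components=3)`. Unlike PCA, t-SNE preserves local neighborhoods rather than global distances, so cluster sizes and the gaps between clusters in its plots shouldn’t be read too literally.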

This is a sample dataset with only four very distinct classes, and the trained model’s accuracy is close to 100%. The clear, well-separated clusters in feature space are consistent with this.

Although this kind of visualization doesn’t seem to be popular anymore, it’s still interesting to watch, and it gives insight into the features a trained model has learned. It can also help surface harder or edge-case samples (by attaching each sample’s loss and filtering on it) or spotlight mislabeled data.
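The loss-based filtering idea can be sketched as follows. This is a minimal illustration, assuming you already have the model’s softmax probabilities and the encoder features for each image. Ranking by per-sample cross-entropy follows the idea above; the k-nearest-neighbor label-disagreement score is an extra heuristic added here for spotting likely mislabels, not something the original workflow describes. The toy clusters and the distance-based “model” are hypothetical.

```python
import numpy as np


def per_sample_loss(probs: np.ndarray, labels: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Cross-entropy of each sample's true-class probability (probs: n x C, rows sum to 1)."""
    true_class_prob = probs[np.arange(len(labels)), labels]
    return -np.log(np.clip(true_class_prob, eps, 1.0))


def knn_disagreement(features: np.ndarray, labels: np.ndarray, k: int = 5) -> np.ndarray:
    """Fraction of each sample's k nearest neighbors (Euclidean) that carry a different label."""
    sq = (features ** 2).sum(axis=1)
    dists = sq[:, None] + sq[None, :] - 2.0 * features @ features.T
    np.fill_diagonal(dists, np.inf)  # a sample is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]
    return (labels[neighbors] != labels[:, None]).mean(axis=1)


def rank_suspects(probs, features, labels, top_n=10, k=5):
    """Return (index, loss, knn_disagreement) for the top_n highest-loss samples."""
    loss = per_sample_loss(probs, labels)
    disagree = knn_disagreement(features, labels, k)
    order = np.argsort(-loss)  # highest loss first
    return [(int(i), float(loss[i]), float(disagree[i])) for i in order[:top_n]]


# Hypothetical toy data: three tight 2-D clusters of five samples each.
rng = np.random.default_rng(1)
centers = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
labels = np.repeat(np.arange(3), 5)
features = centers[labels] + rng.normal(scale=0.5, size=(15, 2))
labels[2] = 1  # hypothetical labeling error: a class-0 sample tagged as class 1

# Stand-in "model": softmax over negative distances to the class centers.
logits = -np.linalg.norm(features[:, None, :] - centers[None, :, :], axis=2)
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)

for idx, loss, disagree in rank_suspects(probs, features, labels, top_n=3, k=4):
    print(idx, round(loss, 2), disagree)
```

In this toy run the flipped sample (index 2) should rank first, with a large loss and all four of its nearest neighbors carrying a different label. A sample that scores high on both signals is a good candidate for manual review.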

This work is helpful in supporting projects like Mission: Iconic Reefs.

https://www.fisheries.noaa.gov/southeast/habitat-conservation/restoring-seven-iconic-reefs-mission-recover-coral-reefs-florida-keys